The path from a project’s starting line to its finish is rarely a straight one, often winding through a landscape of unpredictable variables and unforeseen challenges. For project managers, navigating this uncertainty is not a matter of guesswork but of strategic analysis. The Project Management Professional (PMP) certification exam emphasizes this reality by including sophisticated tools designed to quantify and manage the unknown. Among the most powerful of these is Monte Carlo analysis, a technique that transforms vague estimates into a clear spectrum of probable outcomes.

This article explains why this simulation method is a critical component of the PMP exam curriculum. It explores the fundamental questions surrounding its application, from its basic definition and operational mechanics to its practical uses in both traditional and Agile frameworks. Readers will come away understanding not only what Monte Carlo analysis is but, more importantly, how to think about it in professional project management and what the PMP exam expects candidates to know.

Frequently Asked Questions about Monte Carlo Analysis

What Is Monte Carlo Analysis in Project Management

At its core, Monte Carlo analysis is a sophisticated computational technique that models uncertainty by running a project scenario thousands of times. Each run, known as an iteration, randomly selects values for uncertain variables like task durations or material costs from predefined probability distributions. Instead of producing a single, deterministic answer to the question, “When will this project be finished?” it addresses a far more insightful query: “What is the probability of finishing by a specific date or within a certain budget?” This approach allows project managers to move beyond single-point estimates and understand the full range of potential results.

The power of this method becomes evident when contrasted with deterministic planning techniques. Methods like the Critical Path Method (CPM) rely on fixed, single-point estimates for task durations to calculate one specific project completion date. While useful for establishing a baseline, CPM does not inherently account for the variability and risk that affect every activity. Similarly, the Program Evaluation and Review Technique (PERT) uses a weighted average of three-point estimates (optimistic, most likely, and pessimistic) but still converges on a single expected duration. Monte Carlo analysis, in contrast, directly embraces uncertainty. It evaluates how the risks associated with numerous variables interact and compound, often revealing that the likelihood of delays or cost overruns is significantly higher than simpler methods suggest. One reason is merge bias: where parallel paths converge, work can continue only when the slowest path finishes, so the probability of hitting the merged milestone is lower than the probability of any single path finishing on time.
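The merge-bias effect can be shown with a small sketch. It assumes two hypothetical parallel paths with identical, symmetric three-point estimates of 8, 10, and 12 days, sampled from triangular distributions with Python's standard `random` module. PERT gives each path an expected duration of 10 days, yet the simulation shows that the chance of both paths finishing by day 10 is only about one in four.

```python
import random

def pert_expected(optimistic, most_likely, pessimistic):
    """Classic PERT weighted average: (O + 4M + P) / 6."""
    return (optimistic + 4 * most_likely + pessimistic) / 6

def prob_finish_by(deadline, paths, iterations=20_000, seed=1):
    """Share of iterations in which every parallel path finishes by the deadline.

    Each path is an (O, M, P) tuple sampled from a triangular distribution;
    the merge point is reached only when the slowest path finishes.
    """
    rng = random.Random(seed)
    hits = 0
    for _ in range(iterations):
        # random.triangular takes (low, high, mode)
        finish = max(rng.triangular(o, p, m) for o, m, p in paths)
        if finish <= deadline:
            hits += 1
    return hits / iterations

# Two hypothetical parallel paths that merge before the finish milestone.
paths = [(8, 10, 12), (8, 10, 12)]
deterministic_finish = max(pert_expected(*path) for path in paths)  # 10.0 days
print(deterministic_finish)
print(prob_finish_by(deterministic_finish, paths))  # roughly 0.25, not 0.5
```

With a single path the same check returns roughly 0.5; adding a second, equally uncertain path halves it again, which is exactly the risk a single-point plan hides.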

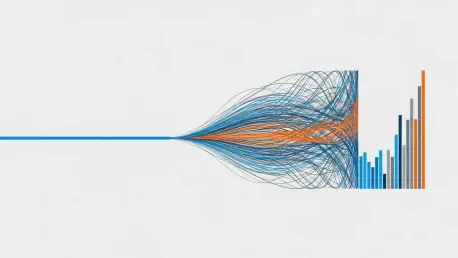

To execute a simulation, several core components must be defined. First are the input variables: any uncertain project elements, such as the time to complete a task, the cost of a resource, or the availability of key personnel. Second, each of these variables is assigned a probability distribution, a mathematical model that describes how likely each possible value is. The simulation then performs thousands of iterations, generating a large dataset of possible project outcomes. Finally, these results are aggregated into outputs, most commonly visualized as probability curves (such as the S-curve) that show confidence levels and percentile results, such as the P50 (the median, with a 50% chance of not being exceeded) or the P90 (a 90% chance of not being exceeded) outcome.
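The four components can be sketched end to end in a few lines of Python. This is a minimal illustration, not a production tool: the three tasks, their three-point estimates, the assumption that they run in series, and the nearest-rank percentile rule are all hypothetical choices made for the example.

```python
import math
import random

# Input variables: hypothetical task durations in days as (optimistic, most likely, pessimistic).
TASKS = {
    "design": (5, 8, 14),
    "build": (10, 15, 25),
    "test": (4, 6, 12),
}

def simulate_total_duration(tasks, iterations=10_000, seed=42):
    """Iterations: each one samples every task (triangular distribution) and sums them (tasks in series)."""
    rng = random.Random(seed)
    return [
        sum(rng.triangular(o, p, m) for o, m, p in tasks.values())
        for _ in range(iterations)
    ]

def percentile(values, p):
    """Outputs: nearest-rank percentile, the smallest outcome with at least p% of results at or below it."""
    ordered = sorted(values)
    rank = max(1, math.ceil(p / 100 * len(ordered)))
    return ordered[rank - 1]

outcomes = simulate_total_duration(TASKS)
for p in (50, 80, 90):
    print(f"P{p}: {percentile(outcomes, p):.1f} days")
```

Adding up the most likely values gives 29 days, but because every estimate here is skewed toward overruns, the simulated P50 lands noticeably later than that, and the P80 and P90 later still.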

Why Does This Technique Matter for the PMP Exam

Monte Carlo analysis is a cornerstone of risk-based decision-making, a fundamental competency for any PMP-certified professional. Its inclusion on the exam is directly tied to the Perform Quantitative Risk Analysis process, where it serves as a primary tool for analyzing the combined effect of identified individual project risks on overall project objectives. The exam, however, does not require candidates to perform complex calculations. Instead, it rigorously tests their conceptual understanding of the technique, focusing on when its use is appropriate, how to interpret its probability-based outputs, and how to effectively communicate the findings to stakeholders.

The relevance of Monte Carlo simulation extends across multiple Project Management Knowledge Areas, making it a versatile and critical concept for the exam. Within Project Risk Management, it is the premier tool for quantitative analysis. In Project Schedule Management, it is used to analyze schedule uncertainty and determine the appropriate size of contingency reserves. For Project Cost Management, it models cost variability to support robust budget planning. Furthermore, it informs Project Integration Management by providing probability-based forecasts that aid in holistic decision-making. Even within the Agile Practice Guide, its principles are applied to forecast release schedules and plan sprints with a more realistic understanding of team velocity.

To succeed on the PMP exam, candidates must internalize several essential concepts related to this analysis. They need to understand its core purpose, which is to model the cumulative effect of uncertainty and generate a distribution of possible outcomes. A clear comprehension of its inputs (probability distributions), its process (random sampling and iteration), and its outputs (probability curves and confidence levels) is crucial. Key terminology, including “iteration,” “S-curve,” and “confidence level,” must be familiar. Critically, candidates must be able to interpret percentile results; for instance, recognizing that a P50 outcome represents the median (a 50% chance of being achieved), while P80 and P90 figures represent increasingly conservative estimates used for high-stakes planning.

How Does a Monte Carlo Simulation Actually Work

The process of conducting a Monte Carlo simulation follows a structured, five-step methodology that transforms abstract uncertainties into concrete, actionable data. The first step involves the systematic identification of project variables and their associated uncertainties. This requires looking beyond obvious risks to pinpoint any element with a meaningful degree of unpredictability that could significantly impact project goals. These variables can fall into several categories, including schedule uncertainties like task durations and approval times, cost uncertainties such as labor rates and material prices, and resource uncertainties like team availability or equipment reliability. The goal is to create a comprehensive list of the critical variables that will drive the simulation.

Once the key variables are identified, the next step is to define a probability distribution for each one. This involves selecting a mathematical model that accurately reflects the nature of the uncertainty for that variable. The triangular distribution is commonly used in project management because it aligns well with the standard three-point estimation technique (optimistic, most likely, pessimistic). Other distributions, such as the normal, lognormal, or beta distribution, may be more appropriate for different types of variables. For example, a task with a known average and standard deviation might be modeled with a normal distribution. The accuracy of the simulation is heavily dependent on selecting the right distribution and defining its parameters with the best available data, often derived from historical project records.
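To illustrate matching distributions to variables, the sketch below pairs three hypothetical variables with samplers from Python's standard `random` module: `triangular` for a three-point estimate, `gauss` for a variable with a known mean and standard deviation, and `lognormvariate` for a strictly positive, right-skewed quantity such as an approval wait. The variable names and parameter values are invented for the example; in practice they would come from historical records.

```python
import math
import random
import statistics

rng = random.Random(7)

# Hypothetical variables, each paired with a sampler for the distribution chosen for it.
SAMPLERS = {
    # Three-point estimate (O=10, M=12, P=20) -> triangular; argument order is (low, high, mode).
    "task_duration_days": lambda: rng.triangular(10, 20, 12),
    # Known average and standard deviation -> normal.
    "labor_rate_per_hour": lambda: rng.gauss(85, 6),
    # Positive and right-skewed -> lognormal; mu and sigma describe the underlying normal (median 5 here).
    "approval_time_days": lambda: rng.lognormvariate(math.log(5), 0.4),
}

for name, sample in SAMPLERS.items():
    draws = [sample() for _ in range(10_000)]
    print(f"{name}: mean={statistics.mean(draws):.2f}, min={min(draws):.2f}, max={max(draws):.2f}")
```

Note that the triangular mean, (10 + 12 + 20) / 3 = 14, sits above the most likely value of 12 because the pessimistic tail is longer than the optimistic one.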

With the model built, the third step is to generate thousands of random scenarios through simulation. In each iteration, the software selects a random value for every uncertain variable based on its assigned probability distribution. For instance, in one run a task might take close to its optimistic duration, while in another it might approach its pessimistic duration, with values near the most likely estimate appearing more often over many runs. The computer then calculates the overall project outcome (e.g., total duration or total cost) for that specific combination of values. Repeating this process thousands of times builds a rich dataset representing a wide spectrum of possible futures for the project, and enough repetitions make the resulting distribution of outcomes stable rather than an artifact of a few lucky or unlucky draws.
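A single iteration on a network with dependencies can be sketched as a forward pass over sampled durations. The four-task network below (A precedes B and C, which run in parallel; D starts once both finish) and its estimates are hypothetical, and triangular distributions are assumed throughout.

```python
import random

# Hypothetical network: A precedes B and C (run in parallel); D starts after both finish.
ESTIMATES = {"A": (3, 5, 9), "B": (6, 8, 14), "C": (5, 9, 12), "D": (2, 3, 6)}
PREDECESSORS = {"A": [], "B": ["A"], "C": ["A"], "D": ["B", "C"]}

def run_iteration(rng):
    """One iteration: sample every duration, then do a forward pass to get the project finish time."""
    finish = {}
    for task in ("A", "B", "C", "D"):  # listed in dependency order
        o, m, p = ESTIMATES[task]
        start = max((finish[pred] for pred in PREDECESSORS[task]), default=0.0)
        finish[task] = start + rng.triangular(o, p, m)
    return finish["D"]

def run_simulation(iterations=10_000, seed=3):
    """Repeat the iteration many times to build the dataset of possible finish times."""
    rng = random.Random(seed)
    return [run_iteration(rng) for _ in range(iterations)]

results = run_simulation()
print(f"min {min(results):.1f}, mean {sum(results) / len(results):.1f}, max {max(results):.1f} days")
```

Every result falls between the all-optimistic finish (11 days) and the all-pessimistic finish (29 days), and the mean sits above the 17-day finish obtained from the most likely values alone.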

The fourth step is the analysis of the simulation’s output, which is typically presented as a probability curve. For a schedule analysis, this is often an S-curve, a cumulative distribution graph where the x-axis represents the project duration and the y-axis shows the cumulative probability of completing the project by that time. Interpreting this curve involves understanding confidence levels. For example, if the S-curve indicates that the 150-day mark corresponds to a 75% probability, it means there is a 75% chance of finishing on or before day 150, but also a 25% chance of finishing later. This allows stakeholders to align on an acceptable level of risk.
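Reading an S-curve amounts to asking what share of iterations finished on or before a given day. The sketch below builds that empirical cumulative distribution with Python's `bisect` module. The outcome data are a hypothetical stand-in drawn from a single triangular distribution rather than from a full project model.

```python
import bisect
import random

def s_curve(outcomes):
    """Return a function giving the empirical cumulative probability (the S-curve's y-value)."""
    ordered = sorted(outcomes)
    n = len(ordered)

    def prob_by(day):
        # Share of iterations that finished on or before `day`.
        return bisect.bisect_right(ordered, day) / n

    return prob_by

# Hypothetical stand-in for simulated project durations in days.
rng = random.Random(11)
durations = [rng.triangular(120, 190, 140) for _ in range(10_000)]
prob_by = s_curve(durations)

for day in (140, 150, 160, 170):
    p = prob_by(day)
    print(f"Day {day}: {p:.0%} chance of finishing on or before, {1 - p:.0%} chance of finishing later")
```

Each printed line is one point on the S-curve, phrased the way the text recommends: a confidence of finishing by a date, paired with the complementary chance of finishing later.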

Finally, the fifth and most critical step is to transform these statistical results into actionable insights, translating raw data into strategic project management decisions. A schedule analysis might reveal that while the median (P50) completion date is acceptable, the 80th percentile (P80) date is a month later, indicating significant schedule risk. This insight would prompt the project manager to investigate the key drivers of this variability and focus mitigation efforts accordingly. Similarly, a cost simulation showing a large gap between the P50 and P90 budget figures would provide a data-driven justification for a contingency reserve sized to that gap, replacing arbitrary percentages with a statistically grounded buffer.
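Sizing a reserve from the gap between two percentiles is straightforward once the outcomes exist. In this sketch, a reserve is defined as the target percentile minus the P50. That definition, the P80 target, and the triangular stand-in for simulated durations are all assumptions made for illustration; organizations choose their own confidence targets.

```python
import math
import random

def percentile(values, p):
    """Nearest-rank percentile of a list of simulated outcomes."""
    ordered = sorted(values)
    rank = max(1, math.ceil(p / 100 * len(ordered)))
    return ordered[rank - 1]

def contingency_reserve(outcomes, target=80, base=50):
    """Buffer needed to move a commitment from the base percentile to the target one."""
    return percentile(outcomes, target) - percentile(outcomes, base)

# Hypothetical stand-in for simulated project durations in days.
rng = random.Random(5)
durations = [rng.triangular(180, 320, 210) for _ in range(10_000)]

print(f"P50: {percentile(durations, 50):.0f} days")
print(f"P80: {percentile(durations, 80):.0f} days")
print(f"Schedule reserve to reach P80: {contingency_reserve(durations):.0f} days")
```

With these made-up numbers, the P80 falls roughly a month after the P50, which is the kind of gap that would trigger the investigation described above.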

What Are Some Powerful Applications of This Analysis in Risk Management

The utility of Monte Carlo analysis extends well beyond foundational schedule and cost forecasting. Its unique ability to model the intricate interactions between multiple sources of uncertainty makes it an indispensable tool across the entire risk management spectrum. One of its most powerful applications is in detailed project schedule risk analysis. By simulating variations in task durations, resource availability, and even the logic of dependencies, it produces a probability distribution of potential project completion dates. This allows a project manager to communicate commitments with clarity, stating, for example, that there is a 50% confidence in finishing by May 15th but an 80% confidence in finishing by June 1st. This probabilistic view supports the data-driven sizing of schedule reserves and helps identify the specific activities whose uncertainty has the greatest impact on the project’s overall timeline.
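One common way to identify the activities that drive the timeline is a criticality index: the fraction of iterations in which a path (or activity) is the longest and therefore sets the finish date. The sketch below computes it for two hypothetical parallel paths with invented three-point estimates in days.

```python
import random

# Hypothetical parallel paths, each a list of (O, M, P) task estimates in days.
PATHS = {
    "civil works": [(20, 25, 40), (10, 12, 15)],
    "equipment procurement": [(30, 35, 45)],
}

def criticality_index(paths, iterations=10_000, seed=9):
    """Fraction of iterations in which each path is the longest (i.e., drives the finish date)."""
    rng = random.Random(seed)
    counts = dict.fromkeys(paths, 0)
    for _ in range(iterations):
        lengths = {
            name: sum(rng.triangular(o, p, m) for o, m, p in tasks)
            for name, tasks in paths.items()
        }
        counts[max(lengths, key=lengths.get)] += 1
    return {name: count / iterations for name, count in counts.items()}

print(criticality_index(PATHS))
```

On most-likely values alone, the two paths differ by only two days (37 versus 35). The simulation quantifies how often each path actually controls the schedule, which is more useful than a single yes/no critical-path flag.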

Another critical application is in achieving greater cost estimation and budget reliability. Traditional cost estimates are often single numbers that fail to capture the inherent volatility of expenses like materials, labor, and equipment. Monte Carlo analysis models these variables to generate a probability distribution of the total project cost. The output might show a median cost of $5 million but a 90th percentile cost of $6.2 million, highlighting a significant potential for overruns. This information is invaluable for justifying contingency reserves to stakeholders, enabling financial risk assessments that quantify the project’s exposure to budget shortfalls, and ultimately securing approval for a more realistic funding plan that accounts for known uncertainties.
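A cost version of the same idea sums sampled cost items and compares the result with the single-point budget. The three cost items, their three-point estimates in thousands of dollars, and the assumption that they vary independently are hypothetical. The P50 and P90 are read off with `statistics.quantiles`.

```python
import random
import statistics

# Hypothetical cost items in $ thousands as (optimistic, most likely, pessimistic).
COST_ITEMS = {
    "materials": (1_800, 2_000, 2_900),
    "labor": (1_500, 1_700, 2_400),
    "equipment": (900, 1_000, 1_500),
}

def simulate_costs(items, iterations=10_000, seed=21):
    """Each iteration samples every cost item independently and sums them."""
    rng = random.Random(seed)
    return [sum(rng.triangular(o, p, m) for o, m, p in items.values()) for _ in range(iterations)]

def prob_exceeds(outcomes, budget):
    """Share of iterations whose total cost is above the budget."""
    return sum(cost > budget for cost in outcomes) / len(outcomes)

costs = simulate_costs(COST_ITEMS)
single_point = sum(m for _, m, _ in COST_ITEMS.values())  # 4,700
deciles = statistics.quantiles(costs, n=10)  # cut points at the 10th, 20th, ..., 90th percentiles

print(f"Single-point estimate: {single_point:,}k")
print(f"P50: {deciles[4]:,.0f}k   P90: {deciles[8]:,.0f}k")
print(f"Chance of exceeding the single-point estimate: {prob_exceeds(costs, single_point):.0%}")
```

Because every item is skewed toward overruns, a budget built from the most likely values is exceeded in the large majority of iterations, which is the quantified exposure the text refers to.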

The technique’s scalability also makes it highly effective for portfolio-level risk aggregation. An organization managing dozens of projects cannot accurately assess its overall risk by simply summing up the risks of individual initiatives. Monte Carlo simulation can model the entire portfolio, accounting for correlations and shared dependencies, such as constrained resources or market factors that affect multiple projects simultaneously. The analysis might reveal that while each project individually has a high probability of success, the collective portfolio has a much lower probability of meeting its targets due to resource conflicts. This insight enables senior leaders to optimize the portfolio by balancing risk, making strategic resource allocation decisions, and setting realistic corporate-level expectations for delivery.
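Portfolio effects can be sketched with a shared factor. In the model below, which is an assumption made purely for illustration, each of five projects slips when a risk score exceeds a threshold set so that every project has about a 10% chance of slipping on its own. The score mixes a common factor (standing in for a constrained resource pool or market conditions) with independent noise. The code compares an independent portfolio with a correlated one.

```python
import math
import random

def portfolio_slips(n_projects=5, shared_weight=0.0, threshold=1.2816, iterations=20_000, seed=13):
    """Return, for each iteration, how many projects slipped.

    Each project's risk score is shared_weight * (common factor) plus independent noise,
    scaled so every score is standard normal; a threshold of 1.2816 gives each project
    roughly a 10% chance of slipping on its own.
    """
    rng = random.Random(seed)
    own_weight = math.sqrt(1 - shared_weight**2)
    slip_counts = []
    for _ in range(iterations):
        shared = rng.gauss(0, 1)  # hypothetical common driver, e.g. a shared resource pool
        slips = sum(
            shared_weight * shared + own_weight * rng.gauss(0, 1) > threshold
            for _ in range(n_projects)
        )
        slip_counts.append(slips)
    return slip_counts

for weight in (0.0, 0.7):
    counts = portfolio_slips(shared_weight=weight)
    n = len(counts)
    print(
        f"shared weight {weight}: per-project slip rate {sum(counts) / (5 * n):.1%}, "
        f"all on time {sum(c == 0 for c in counts) / n:.1%}, "
        f"two or more slip {sum(c >= 2 for c in counts) / n:.1%}"
    )
```

In both cases, the per-project risk looks the same (about 10%), yet the chance that all five deliver is far below 90%. With the shared factor, simultaneous slips become noticeably more frequent, which is a pattern that adding up individual project risks cannot reveal.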

Furthermore, Monte Carlo simulation is an effective tool for scope change impact assessment. When a change is proposed, deterministic estimates of its impact on time and cost can be misleadingly precise. By modeling the uncertainty inherent in the new scope, such as technical complexity, requirements ambiguity, or integration challenges, the simulation can provide a probabilistic assessment of its impact. A change estimated to take four weeks might turn out to have a median duration of 4.5 weeks and a 20% chance of taking more than six weeks. This gives a change control board a much clearer picture of the risk being introduced, leading to more informed approval decisions and proactive adjustments to the overall project plan.
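A proposed change can be assessed the same way. In this sketch, the three-point estimate of 3, 4, and 8 weeks is hypothetical, chosen so that the most likely value matches a four-week single-point estimate while the pessimistic tail reflects integration risk. The sketch reports the median and the chance of exceeding six weeks.

```python
import random

def change_impact(optimistic, most_likely, pessimistic, iterations=10_000, seed=17):
    """Simulate the duration (in weeks) of a proposed change from a three-point estimate."""
    rng = random.Random(seed)
    return [rng.triangular(optimistic, pessimistic, most_likely) for _ in range(iterations)]

# Hypothetical estimate for a change whose single-point estimate was four weeks.
weeks = change_impact(3, 4, 8)
median = sorted(weeks)[len(weeks) // 2]
over_six = sum(w > 6 for w in weeks) / len(weeks)
print(f"Median: {median:.1f} weeks; chance of exceeding 6 weeks: {over_six:.0%}")
```

With these assumed inputs, the median lands near 4.8 weeks and about one run in five exceeds six weeks, which gives the change control board far more to work with than the bare four-week figure.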

How Is Monte Carlo Analysis Used in Agile Project Management

In the context of Agile project management, Monte Carlo analysis adapts to model the specific uncertainties inherent in iterative development. Instead of analyzing individual task durations, the simulation typically focuses on variables like team velocity (the number of story points completed per sprint) and the cycle time for user stories. By running thousands of simulations based on historical performance data, Agile teams can forecast release dates with a range of probabilities rather than committing to a single, fixed date. This probabilistic forecasting helps manage stakeholder expectations by clearly communicating the likelihood of hitting certain milestones.

This application is particularly valuable for release planning and capacity management. For example, a team with a backlog of 400 story points and a historical velocity that varies between 30 and 50 points per sprint can use Monte Carlo simulation to answer critical questions. It can determine the probable number of sprints required to complete the work, showing outcomes like a 50% chance of finishing within 10 sprints and a 90% chance of finishing within 13 sprints. This allows product owners and stakeholders to make informed trade-offs between scope, time, and confidence level, fostering a more realistic and transparent planning process that aligns with Agile principles of adaptability and empirical evidence.
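A common way to run this forecast is to resample past sprint velocities. The sketch below assumes a hypothetical history of ten sprints with velocities between 30 and 50 points and treats each future sprint as an independent draw from that history. These are simplifying assumptions, not a prescribed method.

```python
import math
import random

def sprints_to_finish(backlog, velocity_history, rng):
    """One iteration: draw each sprint's velocity from past sprints until the backlog is done."""
    remaining, sprints = backlog, 0
    while remaining > 0:
        remaining -= rng.choice(velocity_history)
        sprints += 1
    return sprints

def forecast(backlog, velocity_history, iterations=10_000, seed=8):
    """Sorted list of simulated sprint counts."""
    rng = random.Random(seed)
    return sorted(sprints_to_finish(backlog, velocity_history, rng) for _ in range(iterations))

def percentile(sorted_values, p):
    """Nearest-rank percentile of an already sorted list."""
    return sorted_values[max(1, math.ceil(p / 100 * len(sorted_values))) - 1]

# Hypothetical velocities (story points per sprint) from the team's last ten sprints.
history = [30, 34, 38, 41, 45, 50, 36, 42, 31, 47]
results = forecast(400, history)

print(f"50% confidence: {percentile(results, 50)} sprints; 90% confidence: {percentile(results, 90)} sprints")
for n in range(results[0], results[-1] + 1):
    share = sum(r <= n for r in results) / len(results)
    print(f"Done within {n} sprints: {share:.0%}")
```

The exact percentiles depend entirely on the velocity history fed in; a team with more erratic velocity would see a wider gap between its 50% and 90% forecasts.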

What Role Do Modern Tools Play in Running These Simulations

Modern project management platforms and specialized software have made Monte Carlo analysis significantly more accessible, moving it from the domain of statisticians to the toolkit of the everyday project manager. These tools can support the methodology by integrating probabilistic thinking directly into standard planning workflows. Platforms can be configured to capture three-point estimates (optimistic, most likely, pessimistic) for tasks, which then serve as the inputs for a built-in or integrated simulation engine. The results are often presented in user-friendly visual formats, such as S-curves and histograms, that make it easy to understand confidence levels and probability ranges without needing to delve into the underlying statistical calculations.

Furthermore, the integration of artificial intelligence is automating and enhancing the simulation process. AI-powered platforms can analyze historical project data to automatically fit the most appropriate probability distributions to different types of tasks, removing much of the guesswork from the modeling phase. They can also run simulations continuously in the background, updating forecasts in real time as new project data becomes available. This dynamic capability transforms the analysis from a static, one-time exercise into a living forecast that reflects the current state of the project. For the PMP exam, however, the key takeaway is that while these tools are powerful enablers, the exam itself focuses on a candidate’s understanding of when and why to apply the technique, not their proficiency with any specific software.

How Many Iterations Are Needed for a Reliable Simulation

The number of iterations required for a reliable Monte Carlo simulation depends on the complexity of the project model, but a general standard has emerged in practice. For most project management applications, running between 5,000 and 10,000 iterations is considered sufficient to achieve a stable and accurate probability distribution. This range typically ensures that the results have converged, meaning that running additional iterations would not significantly alter the outcome. For simpler projects with fewer uncertain variables, a reasonable degree of accuracy can often be achieved with as few as 3,000 iterations.

Conversely, for highly complex programs with a large number of interrelated variables and intricate dependencies, more iterations may be necessary to fully explore the outcome space. In such cases, running 20,000 or more iterations might be warranted to ensure the resulting probability curves are smooth and statistically robust. Because modern software makes running many iterations computationally cheap, the primary consideration is making the count high enough to produce a trustworthy result for critical decision-making. A practical check is to confirm that key percentiles, such as the P80, barely change when the iteration count is increased.
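One way to check convergence is to repeat the same simulation with different random seeds and see how much a key percentile moves. The sketch below does this for the P80 of a hypothetical three-task serial schedule. The model and the 20-run comparison are assumptions for illustration, and the output does not by itself validate any particular iteration threshold.

```python
import math
import random

def percentile(values, p):
    """Nearest-rank percentile."""
    ordered = sorted(values)
    return ordered[max(1, math.ceil(p / 100 * len(ordered))) - 1]

def simulate(iterations, seed):
    """Hypothetical three-task serial schedule with triangular durations in days."""
    rng = random.Random(seed)
    tasks = [(5, 8, 14), (10, 15, 25), (4, 6, 12)]
    return [sum(rng.triangular(o, p, m) for o, m, p in tasks) for _ in range(iterations)]

def p80_spread(iterations, repeats=20):
    """Range of P80 estimates across independent runs of the same size; smaller means more stable."""
    estimates = [percentile(simulate(iterations, seed), 80) for seed in range(repeats)]
    return max(estimates) - min(estimates)

for n in (500, 3_000, 10_000):
    print(f"{n:>6} iterations: P80 varies by {p80_spread(n):.2f} days across runs")
```

When the spread at a given iteration count is small relative to the decisions being made (for example, well under a day for a schedule measured in weeks), adding more iterations is unlikely to change the conclusion.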

Is Mastering Monte Carlo Analysis Required for PMP Certification

While mastering the intricacies of performing a Monte Carlo simulation is not a requirement for passing the PMP exam, a solid conceptual understanding of the technique is absolutely essential. The exam does not present candidates with questions that require them to perform calculations or build a simulation model from scratch. Instead, it evaluates their ability to recognize situations where the analysis is the most appropriate tool to use, their knowledge of the inputs and outputs, and, most importantly, their skill in interpreting the results to make informed project decisions.

The reason for its prominent place in the PMP curriculum is its direct connection to the core principles of quantitative risk analysis. The certification signifies that a project manager can move beyond qualitative risk assessment (e.g., high, medium, low) to a more sophisticated, quantitative approach that expresses risk in terms of its probabilistic impact on project objectives. Therefore, candidates are expected to understand that when a scenario involves combined uncertainty from multiple sources and the need for a probabilistic forecast, Monte Carlo analysis is the go-to technique. In essence, the exam tests for the wisdom to know when and why to use it, not the technical skill to execute it.

Summary of Key Insights

The inclusion of Monte Carlo analysis on the PMP exam highlights a critical principle of modern project management: uncertainty must be managed with data, not intuition. The technique provides a powerful framework for modeling the real-world variability of project schedules and costs by using probability distributions instead of misleading single-point estimates. This probabilistic approach is fundamental to quantitative risk analysis, a key competency for any certified professional.

For PMP candidates, the focus remains squarely on conceptual understanding rather than mathematical execution. It is vital to recognize when the method is applicable, particularly in scenarios involving the combined effect of multiple risks. Furthermore, interpreting the outputs—such as S-curves and confidence levels like P50, P80, and P90—is a critical skill. These results enable data-driven conversations with stakeholders about risk tolerance and provide a defensible basis for establishing necessary contingency reserves, thereby fostering more realistic and resilient project plans.

Final Thoughts on Probabilistic Planning

The emphasis placed on Monte Carlo analysis within the PMP framework reflects a significant evolution in the project management profession. It marks a deliberate turn away from the false precision of deterministic plans, which often fail to hold up against the complexities of real-world execution. Adopting this probabilistic mindset is a crucial step toward a more mature and honest approach to risk management.

Project managers who integrate this perspective into their practice can have more transparent and meaningful conversations with stakeholders. They are no longer defending a single, fragile date on a calendar; instead, they present a range of possible outcomes, complete with probabilities that allow for shared, risk-informed decisions. This shift changes the nature of project forecasting, transforming it from an exercise in optimism into a strategic analysis grounded in statistical reality.