The widespread narrative of artificial intelligence as a disruptive force that shatters legacy enterprise systems often overlooks a more subtle and revealing truth about its actual impact on modern operations. Across industries, a different story is unfolding: AI is not the sledgehammer breaking well-oiled machines but rather the high-intensity diagnostic light revealing the hairline fractures and hidden rust that have been corroding them for years. As organizations move beyond pilot programs and embed AI into the very heart of their operations, they are discovering that the technology’s greatest power lies in its unforgiving reflection of their own internal weaknesses.

The New Operating Reality: AI’s Deep Integration into Enterprise

Artificial intelligence has decisively migrated from the controlled environment of the research lab to the chaotic reality of the enterprise floor. What began as a tool for discrete, experimental projects has now become a foundational component of mission-critical systems. In supply chain management, AI algorithms orchestrate complex logistics networks, predict demand with granular accuracy, and optimize inventory levels in real time. Similarly, in finance and human resources, AI is no longer a peripheral analytics tool but an active participant in functions ranging from fraud detection and financial forecasting to talent acquisition and performance management.

This shift marks a fundamental change in the operational landscape. AI is no longer an add-on but a deeply integrated layer within the enterprise’s central nervous system, influencing strategic and tactical decisions at machine speed. Its outputs directly trigger procurement orders, adjust production schedules, and even shape hiring decisions. The consequence of this deep embedding is that the performance of the AI is now inextricably linked to the performance of the entire organization, making its reliability a matter of core business continuity.

From Soft Assumptions to Hard Failures: How AI Changes the Rules

The Great Unmasking: AI’s Role as a Catalyst for Revealing Hidden Weaknesses

The primary mechanism through which AI exposes organizational flaws is by converting long-held, implicit assumptions into rigid, explicit requirements for success. For decades, businesses have operated on a cushion of “good enough” data and informal processes, relying on the adaptability and intuition of their human workforce to bridge the gaps. An experienced warehouse manager, for example, instinctively knows which inventory data is unreliable or which supplier delivery times are aspirational rather than factual. These unwritten rules and workarounds formed a crucial buffer against systemic friction.

AI systematically dismantles this human buffer. A machine learning model operates on the data it is given, treating every input with equal, unwavering confidence. It cannot infer context or apply seasoned skepticism. Consequently, when fed incomplete or inaccurate data, it does not hesitate; it simply produces a flawed output with the same authority as a correct one. This process unmasks the true cost of poor data governance and inconsistent operational discipline, transforming what were once minor annoyances into sources of significant, cascading failures.
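To make the point concrete, here is a minimal sketch of the difference between a rule that trusts every record and a simple check that encodes a manager's skepticism. All names here (`InventoryRecord`, `naive_reorder_qty`, `validation_issues`) and the 30-day staleness limit are hypothetical, chosen for illustration and not taken from any particular system.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass(frozen=True)
class InventoryRecord:
    """Hypothetical inventory record, for illustration only."""
    sku: str
    on_hand: int
    last_counted: date


def naive_reorder_qty(record: InventoryRecord, target_stock: int) -> int:
    """Trusts the record as-is, the way an unguarded model would."""
    return max(target_stock - record.on_hand, 0)


def validation_issues(record: InventoryRecord, today: date,
                      max_age_days: int = 30) -> list[str]:
    """Makes explicit the doubts an experienced manager applies implicitly.

    The 30-day default is an assumed policy value, not a recommendation.
    """
    issues = []
    if record.on_hand < 0:
        issues.append("negative on-hand quantity")
    if today - record.last_counted > timedelta(days=max_age_days):
        issues.append("count is stale")
    return issues


# A record that is both impossible (negative stock) and out of date.
record = InventoryRecord(sku="SKU-1", on_hand=-4, last_counted=date(2024, 1, 2))
today = date(2024, 3, 1)

order = naive_reorder_qty(record, target_stock=100)   # computed without hesitation
problems = validation_issues(record, today)           # reasons to hold the order back
```

The naive rule returns an order quantity for the bad record just as readily as for a good one. The validation step adds no intelligence; it only writes down assumptions that previously lived in someone's head.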

Stress-Testing Reality: Concrete Cases of AI Amplifying Latent Flaws

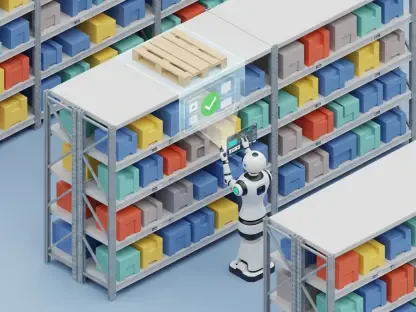

Nowhere is this dynamic more apparent than in warehouse management. An AI system designed to optimize product placement might assume uniform shelf accessibility and consistent staffing levels. When it directs a worker to a physically blocked location or bases its logic on an outdated inventory count, the immediate result is a loss of efficiency. Projections show that a mere 5% data inaccuracy in inventory records can be amplified by an AI optimization engine to cause a 20-30% drop in pick-and-pack throughput during peak periods, alongside a notable increase in workplace safety incidents.

Similarly, in predictive maintenance, an AI model’s effectiveness is entirely dependent on comprehensive historical service records and a clear, management-defined risk tolerance. The model forces a stark choice between the cost of a false positive (unnecessary downtime for servicing a healthy machine) and a false negative (catastrophic equipment failure). Without clean data and strategic guidance, the system is projected to increase maintenance costs without a corresponding improvement in asset uptime. Likewise, AI-driven supplier relationship platforms that score vendors on quantitative metrics can flag a reliable, long-term partner as a risk due to incomplete data, forcing a confrontation between algorithmic logic and established business trust.
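The false positive versus false negative trade-off can be illustrated with a small expected-cost calculation. This is a sketch under simplifying assumptions: the model outputs a failure probability, servicing a machine always prevents the failure, and the cost figures are invented for illustration. `CostPolicy`, `alert_threshold`, and `should_service` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CostPolicy:
    """Management-defined costs (illustrative figures, not real data)."""
    false_positive_cost: float  # servicing a machine that was healthy
    false_negative_cost: float  # failing to service a machine that then breaks


def alert_threshold(policy: CostPolicy) -> float:
    """Failure probability above which servicing is cheaper in expectation.

    Service when p * C_fn > (1 - p) * C_fp, i.e. p > C_fp / (C_fp + C_fn).
    """
    total = policy.false_positive_cost + policy.false_negative_cost
    return policy.false_positive_cost / total


def should_service(failure_probability: float, policy: CostPolicy) -> bool:
    return failure_probability > alert_threshold(policy)


# Two assumed risk postures applied to the same model output.
cautious = CostPolicy(false_positive_cost=2_000, false_negative_cost=50_000)
tolerant = CostPolicy(false_positive_cost=2_000, false_negative_cost=6_000)

predicted = 0.10  # the model's estimated probability of failure
service_if_cautious = should_service(predicted, cautious)  # threshold is about 0.04
service_if_tolerant = should_service(predicted, tolerant)  # threshold is 0.25
```

The same 10% prediction leads to opposite actions under the two policies. The model supplies the probability; the threshold comes from cost figures that only management can set.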

The Unseen Costs: Navigating the Human Side of Algorithmic Optimization

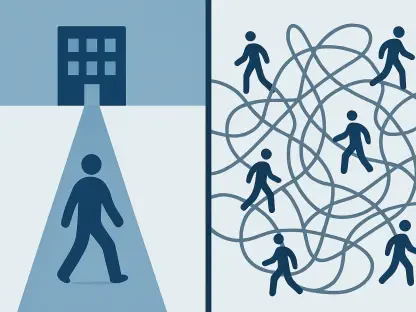

The drive for AI-powered efficiency often carries significant unseen costs, particularly on the human side of the equation. Optimization models are typically designed to minimize easily quantifiable metrics, such as fuel consumption, labor hours, or equipment downtime. These algorithms excel at finding the most direct path to a defined numerical goal but remain blind to critical human factors like employee morale, cognitive overload, and burnout, which are far more difficult to measure.

This narrow focus on engineering out human “inefficiency” systematically erodes the organizational slack necessary for resilience. Human variability and informal problem-solving are not bugs; they are features that allow an organization to adapt to unexpected events, from supply chain disruptions to sudden changes in customer demand. By creating hyper-optimized, brittle systems, organizations inadvertently sacrifice the very flexibility that allows them to navigate crises. The downstream costs—manifesting as higher employee turnover, reduced innovation, and a diminished capacity for creative problem-solving—rarely appear on the initial project’s balance sheet but represent a substantial long-term liability.

Forging a New Contract: Governance, Authority, and Trust in the AI Era

The integration of AI fundamentally reshapes the landscape of organizational authority. Decision-making power, once the domain of experienced human managers, increasingly migrates to algorithmic outputs. This shift demands a new social contract within the enterprise, one that establishes clear governance frameworks for managing the strategic risks that AI surfaces. The technology does not make decisions in a vacuum; it externalizes them, forcing leadership to confront and codify trade-offs that were previously handled implicitly.

This new reality necessitates robust governance to manage the inherent uncertainties of AI. For instance, how to weigh a false positive against a false negative in a predictive maintenance system is a business decision, not a technical one, and must be defined by management. Furthermore, the rise of Generative AI introduces the challenge of “hallucinations” (confidently delivered but entirely fabricated information), which can severely erode operational trust. Establishing protocols for validating AI outputs and defining clear lines of accountability when an algorithm’s recommendation leads to a negative outcome is no longer optional but a prerequisite for sustainable implementation.

The Next Frontier: Generative AI and the Escalating Demand for Maturity

The advent of Generative AI raises the stakes considerably, escalating the demand for organizational maturity. Unlike earlier machine learning models that often operated in an advisory capacity, GenAI is being integrated directly into core systems of record, such as Enterprise Resource Planning (ERP) and supply chain planning platforms. Its ability to generate novel content means it can autonomously draft procurement orders, create production schedules, and communicate with suppliers, making the entire enterprise more vulnerable to the consequences of flawed data or logic.

These challenges are rapidly migrating beyond the supply chain and into the complex domain of people management. AI-powered tools are now used to screen applicants, monitor employee activity, and even suggest performance improvement plans. However, these systems expose flawed corporate assumptions about what constitutes genuine skill and productivity. An AI that filters resumes based on keywords may overlook unconventional but highly qualified talent. Likewise, monitoring software that equates keyboard activity with valuable work creates an incentive for “productivity theater,” ultimately eroding trust and concentrating the real workload on a few key performers, leading to their eventual burnout.

The Mirror Effect: A Call for Foundational Strength Over Technological Hype

The most critical finding from the ongoing integration of AI into the enterprise is that its success is not primarily a technological challenge but a profound test of an organization’s foundational maturity. The technology does not introduce new weaknesses into businesses; it acts as an unforgiving mirror, reflecting the true state of their data governance, process discipline, and operational resilience with stark clarity. Organizations that have long compensated for systemic deficiencies with human ingenuity find those patches swiftly overwhelmed.

Ultimately, the path forward requires a shift in focus from technological hype to foundational strength. Before scaling complex AI initiatives, leaders must first invest in the unglamorous but essential work of cleaning data, standardizing processes, and fostering a culture of operational discipline. AI offers an unparalleled opportunity for improvement, but it can only build upon the foundation that already exists. The greatest barrier to realizing the promise of artificial intelligence is not the sophistication of the algorithms but the readiness of the organization to handle the truth.