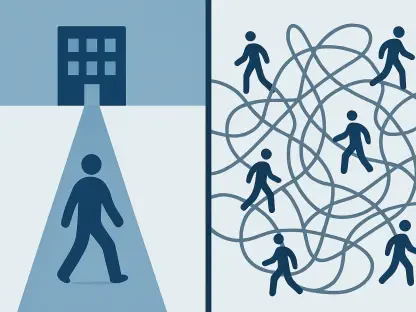

As artificial intelligence models continue to grow rapidly in size and capability, a significant gap has opened between their theoretical power and the practical challenge of deploying them efficiently at scale. The very size of these advanced systems, once a measure of their prowess, has become a primary obstacle to their widespread adoption. In this landscape, inference speed (how quickly a model processes an input and generates a response) is no longer just a performance metric but a business imperative. It determines whether AI applications can be cost-effective, scalable, and responsive enough to serve millions of users simultaneously. Recognizing this industry-wide bottleneck, NVIDIA has introduced a toolkit designed to address this challenge head-on. It offers a suite of optimization techniques organized into five core pathways to better AI performance and efficiency.

The New Bottleneck: Why AI’s ‘Thinking’ Speed Is Holding Back Innovation

The immense capabilities of modern AI have created new expectations, yet the computational demands of these models often make them impractical for real-time applications. The gap between a model’s potential and its deployed performance is widening, and latency has become a major barrier to both user satisfaction and economic viability. Running massive models requires significant hardware resources, which translates directly into high operational costs and energy consumption. Consequently, organizations are finding that training a state-of-the-art model is one problem, while deploying it cost-effectively and responsively is a different and often harder one.

This operational friction underscores the critical importance of inference optimization. It is the bridge between theoretical AI breakthroughs and tangible, real-world value. Optimizing inference is not merely about making things faster; it is about making AI accessible, scalable, and sustainable. By reducing a model’s computational footprint, organizations can lower hosting costs, improve user experience with faster response times, and deploy powerful AI on a wider range of hardware, including edge devices. NVIDIA’s toolkit directly targets this challenge, offering a structured approach to turning cumbersome models into efficient, deployable ones.

Deconstructing the Toolkit: Five Pathways to Peak Performance

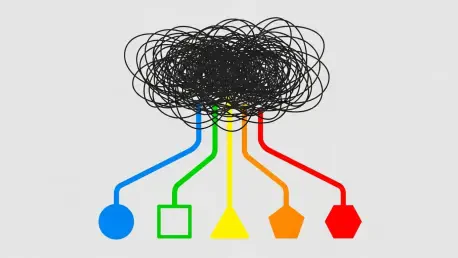

To navigate the complexities of AI optimization, developers need a versatile set of tools that can address different performance bottlenecks without compromising model quality. The toolkit takes a layered approach, with five distinct techniques ranging from quick post-training adjustments to deeper architectural modifications. Each technique targets a specific source of inefficiency, so teams can choose the method that best fits their application’s requirements for speed, accuracy, and deployment resources. Together, these strategies form a comprehensive approach to building faster and leaner AI systems.

The Quick-Win Strategy: Shedding Digital Weight with Post-Training Quantization

At its core, quantization reduces the numerical precision of a model’s parameters, somewhat like condensing a dense text into a compact, high-quality summary. Instead of storing weights as 16- or 32-bit floating-point numbers, it converts them to lower-precision formats such as 8-bit integers, which shrinks the model’s memory footprint and lets the hardware move and compute on the data faster. This reduction in “digital weight” translates into immediate gains in inference speed and throughput.
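To make the idea concrete, here is a minimal pure-Python sketch of symmetric, per-tensor INT8 quantization, plus a back-of-the-envelope estimate of weight memory at different bit widths. The function names and the 7-billion-parameter example are illustrative assumptions, not part of NVIDIA’s toolkit; production tools typically use finer-grained (per-channel or per-block) scales.

```python
def quantize_int8(values):
    """Symmetric per-tensor INT8 quantization: each x is stored as an integer q with x ≈ q * scale."""
    absmax = max(abs(v) for v in values)
    scale = absmax / 127 if absmax > 0 else 1.0  # map the largest magnitude to 127
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale


def dequantize(q, scale):
    """Recover approximate floating-point values from the integer codes."""
    return [qi * scale for qi in q]


def weight_memory_gb(n_params, bits_per_param):
    """Memory for the weights alone (ignores activations, KV cache and stored scale factors)."""
    return n_params * bits_per_param / 8 / 1e9


weights = [0.5, -1.27, 0.0, 0.9]
q, scale = quantize_int8(weights)
print(q)  # [50, -127, 0, 90]
print(weight_memory_gb(7e9, 16), weight_memory_gb(7e9, 8))  # 14.0 7.0
```

Halving the bits per weight halves the weight memory, which is where much of the speedup comes from: decoding in large models is often limited by how fast weights can be read from memory.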

The most direct way to capture this benefit is Post-training Quantization (PTQ). PTQ is designed for speed and simplicity: it takes a fully trained model and compresses it without any retraining. The process runs a small, representative sample of data, called a calibration dataset, through the model to measure the value ranges of its weights and activations. Those measurements determine the scaling factors used to convert the model to a lower-precision format, producing a smaller, faster version ready for deployment.
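The calibration step can be sketched as follows: run a few representative batches through the model, record activation magnitudes, and turn them into a clipping value and a scale. The two rules shown, absolute-maximum and percentile clipping, are common choices picked here for illustration; the helper names and batch values are hypothetical, and real toolkits collect these statistics per layer.

```python
def calibrate_absmax(batches):
    """Clip at the largest absolute activation seen during calibration."""
    return max(abs(v) for batch in batches for v in batch)


def calibrate_percentile(batches, pct=99.9):
    """Clip at a high percentile instead, so a few outliers don't inflate the scale."""
    mags = sorted(abs(v) for batch in batches for v in batch)
    idx = min(len(mags) - 1, int(len(mags) * pct / 100))
    return mags[idx]


def quantize_activations(values, clip, bits=8):
    """Quantize with a scale derived from the calibrated clip value; larger values saturate."""
    qmax = 2 ** (bits - 1) - 1
    scale = clip / qmax
    return [max(-qmax, min(qmax, round(v / scale))) for v in values], scale


# Hypothetical activations recorded for one layer across two calibration batches.
calib = [[0.1, -0.5, 0.3], [2.0, -0.2, 0.4]]
print(calibrate_absmax(calib))          # 2.0
print(calibrate_percentile(calib, 50))  # 0.4
```

A tighter clip gives finer resolution for typical values at the cost of saturating rare large ones, which is one reason the choice of calibration data and rule affects PTQ accuracy.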

However, this convenience comes with a trade-off. PTQ is quick to apply and delivers substantial performance gains, but the conversion to lower precision can cost some accuracy. For many applications, this slight degradation is an acceptable price for significantly lower latency. For others, particularly sensitive applications where every fraction of a percentage point in accuracy matters, the trade-off may be too great, and more advanced optimization techniques are needed.
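One way to see the trade-off is to quantize the same values at different bit widths and compare the reconstruction error. This is a simplified proxy that assumes a uniform symmetric quantizer (an assumption, not a description of every toolkit); whether the error actually matters still has to be checked on the model’s own evaluation data.

```python
def mean_abs_error(values, bits):
    """Average |x - dequant(quant(x))| for a symmetric per-tensor quantizer of the given width."""
    qmax = 2 ** (bits - 1) - 1
    scale = max(abs(v) for v in values) / qmax
    recon = [max(-qmax, min(qmax, round(v / scale))) * scale for v in values]
    return sum(abs(v - r) for v, r in zip(values, recon)) / len(values)


# Evenly spaced stand-in "weights" in [-0.5, 0.5].
values = [i / 100 - 0.5 for i in range(101)]
for bits in (8, 4):
    print(bits, round(mean_abs_error(values, bits), 5))
```

Each bit removed roughly doubles the quantization step, so the error grows quickly as precision drops.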

Beyond Simple Compression: Mastering Accuracy with Advanced Quantization

When the accuracy trade-offs of PTQ are unacceptable, developers can turn to more sophisticated methods that integrate quantization directly into training. The next logical step is Quantization-aware Training (QAT), a technique designed to recover the accuracy lost during simple post-training compression. QAT simulates the effects of quantization during a fine-tuning phase, essentially “teaching” the model to operate effectively in a low-precision environment. Exposed to this simulated quantization noise, the model adapts its weights to minimize the impact of reduced precision, often recovering accuracy close to that of the original full-precision model.
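The core QAT mechanism, usually called fake quantization with a straight-through estimator (STE), can be sketched on a toy two-weight linear model. The forward pass uses quantized weights, while gradients update full-precision “latent” weights as if rounding were the identity. The 4-bit grid, fixed scale, learning rate and data below are hypothetical choices for illustration; real QAT fine-tunes a full network, usually with calibrated or learned scales.

```python
def fake_quant(w, scale, qmin=-8, qmax=7):
    """Round onto a signed 4-bit grid, then map back to a float (quantize + dequantize)."""
    return max(qmin, min(qmax, round(w / scale))) * scale


def mse(latent, data, scale):
    """Loss of the model as it will actually run after deployment: with quantized weights."""
    total = 0.0
    for x, y in data:
        pred = sum(fake_quant(w, scale) * xi for w, xi in zip(latent, x))
        total += (pred - y) ** 2
    return total / len(data)


def train_qat(data, n_features, scale, lr=0.05, epochs=200, qmin=-8, qmax=7):
    latent = [0.0] * n_features  # full-precision weights that the optimizer updates
    for _ in range(epochs):
        grads = [0.0] * n_features
        for x, y in data:
            qw = [fake_quant(w, scale, qmin, qmax) for w in latent]  # forward uses quantized weights
            err = sum(q * xi for q, xi in zip(qw, x)) - y
            for i, xi in enumerate(x):
                grads[i] += 2 * err * xi / len(data)  # dLoss/dq_i
        for i in range(n_features):
            # STE: treat d(fake_quant)/dw as 1 inside the representable range and 0 outside it.
            if qmin * scale <= latent[i] <= qmax * scale:
                latent[i] -= lr * grads[i]
    return latent


# Toy data from y = 0.73*x1 - 0.41*x2 (hypothetical "true" weights that fall between grid points).
data = [(x, 0.73 * x[0] - 0.41 * x[1]) for x in [(1.0, 0.0), (0.0, 1.0), (1.0, 1.0), (0.5, -1.0)]]
scale = 0.1
latent = train_qat(data, n_features=2, scale=scale)
print([fake_quant(w, scale) for w in latent], mse(latent, data, scale))
```

Because the loss is always computed through `fake_quant`, training optimizes the quantized model directly rather than a full-precision model that is rounded afterwards.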

For applications demanding the highest possible fidelity, the optimization journey can go further with Quantization-aware Distillation (QAD). This technique combines the principles of QAT with knowledge distillation in a “teacher-student” setup. A full-precision “teacher” model guides a low-precision (and therefore smaller) “student” model during training. The student learns not only from the training data but also by mimicking the teacher’s rich output distributions, effectively transferring the teacher’s “knowledge” into the compact quantized form. QAD is the most involved of the three quantization options and is aimed at highly sensitive applications, where the goal is a quantized model that is both fast and as close as possible to full-precision accuracy.
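The QAD objective can be sketched as a distillation loss between a full-precision teacher and a fake-quantized student. In this toy version (an illustrative assumption, not NVIDIA’s implementation) both are single linear layers, the student’s latent weights start as a copy of the teacher’s, and the loss is the temperature-scaled KL divergence between their softmax outputs. During training, this loss would be minimized with STE gradients, as in QAT.

```python
import math


def softmax(logits, temperature=1.0):
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(p, q):
    """KL(p || q) for discrete distributions."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def fake_quant(w, scale, qmax=7):
    """Signed 4-bit grid: integers in [-8, 7] times scale."""
    return max(-qmax - 1, min(qmax, round(w / scale))) * scale


def linear_logits(weight_rows, x):
    return [sum(w * xi for w, xi in zip(row, x)) for row in weight_rows]


def qad_loss(teacher_rows, student_latent_rows, x, scale, temperature=2.0):
    teacher_logits = linear_logits(teacher_rows, x)  # full precision
    student_rows = [[fake_quant(w, scale) for w in row] for row in student_latent_rows]
    student_logits = linear_logits(student_rows, x)  # low precision
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    # T^2 keeps gradient magnitudes comparable across temperatures (Hinton et al.'s convention).
    return temperature ** 2 * kl_divergence(p_teacher, p_student)


teacher = [[0.53, -0.31], [0.17, 0.74]]
x = [1.0, 2.0]
print(qad_loss(teacher, teacher, x, scale=0.125))  # > 0: rounding shifted the student's logits
```

Matching the teacher’s full output distribution, rather than only the correct label, gives the quantized student a richer training signal about which errors matter.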

Outsmarting Latency: How Speculative Decoding Changes the Generative Game

While quantization addresses a model’s size and computational complexity, another significant bottleneck lies in the inference process itself, particularly for generative models such as large language models. These models produce output autoregressively, one token at a time, and each new token requires another forward pass through the model, so latency accumulates with the length of the response. Speculative Decoding offers an ingenious solution to this challenge by changing how tokens are generated.

The approach uses two models: the original, large target model and a much smaller, faster “draft” model. The nimble draft model rapidly proposes a short sequence of upcoming tokens. The larger model then verifies this entire sequence in a single parallel forward pass, which is much cheaper than generating each of those tokens one by one. Draft tokens are accepted up to the first one the target model disagrees with; at that point the target model supplies its own token, and the cycle repeats. Because several tokens can be accepted per pass of the large model, this reduces the time needed to generate each subsequent token. It does not shorten time-to-first-token, which is dominated by processing the prompt.
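A minimal sketch of the greedy variant of this draft-then-verify loop is shown below. The two “models” are hypothetical deterministic next-token functions standing in for real networks, and the target’s check of all drafted positions, which a real system performs in one batched forward pass, is simulated with separate calls and counted as one pass. Sampling-based decoding uses a probabilistic acceptance rule instead of exact matching.

```python
def target_model(seq):
    """Hypothetical expensive model: deterministic next token given the sequence so far."""
    return (seq[-1] * 3 + 1) % 10


def draft_model(seq):
    """Hypothetical cheap model: agrees with the target except when len(seq) is a multiple of 4."""
    guess = target_model(seq)
    return (guess + 1) % 10 if len(seq) % 4 == 0 else guess


def greedy_decode(model, prompt, n_new):
    seq, passes = list(prompt), 0
    for _ in range(n_new):
        seq.append(model(seq))  # one forward pass per generated token
        passes += 1
    return seq, passes


def speculative_decode(target, draft, prompt, n_new, k=4):
    seq, passes = list(prompt), 0
    while len(seq) - len(prompt) < n_new:
        # 1) Draft k tokens autoregressively with the cheap model.
        proposal, ctx = [], list(seq)
        for _ in range(k):
            tok = draft(ctx)
            proposal.append(tok)
            ctx.append(tok)
        # 2) Target predicts all k+1 positions; in a real system this is ONE parallel pass.
        verified = [target(seq + proposal[:i]) for i in range(k + 1)]
        passes += 1
        # 3) Accept the longest prefix on which draft and target agree...
        n_ok = 0
        while n_ok < k and proposal[n_ok] == verified[n_ok]:
            n_ok += 1
        # ...then append the target's own token (a correction, or a bonus if all k matched).
        seq.extend(proposal[:n_ok])
        seq.append(verified[n_ok])
    return seq[: len(prompt) + n_new], passes


baseline, base_passes = greedy_decode(target_model, [1], 12)
fast, spec_passes = speculative_decode(target_model, draft_model, [1], 12)
print(fast == baseline, base_passes, spec_passes)  # True 12 3
```

Every token that ends up in the output is one the target model itself would have chosen, so the result is identical to plain greedy decoding; only the number of expensive passes changes.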

The most compelling advantage of Speculative Decoding is its non-invasive nature. It accelerates inference substantially without retraining or modifying the architecture of the original model (a suitable draft model is still required), and with standard verification the original model’s outputs are preserved. This makes it an efficient strategy for overcoming the latency inherent in sequential generation, offering a significant performance boost with minimal implementation overhead.
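A rough sense of the potential gain comes from the analysis by Leviathan et al. (2023), which assumes each draft token is accepted independently with the same probability $\alpha$. With $\gamma$ drafted tokens per step, the expected number of tokens produced per pass of the target model is:

$$
\mathbb{E}[\text{tokens per target pass}] = \frac{1 - \alpha^{\gamma + 1}}{1 - \alpha},
\qquad
\alpha = 0.8,\ \gamma = 4:\quad \frac{1 - 0.8^{5}}{1 - 0.8} = \frac{0.67232}{0.2} \approx 3.36
$$

The values $\alpha = 0.8$ and $\gamma = 4$ are illustrative, not measurements. The formula counts target passes only, so the draft model’s own cost makes the real wall-clock speedup smaller.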

The Architectural Overhaul: Forging Leaner Models Through Pruning and Distillation

For those seeking a permanent, fundamental solution to model inefficiency, the most intensive approach is to reshape the model’s architecture itself. Rather than only lowering the precision of an existing model’s numbers, this strategy produces a structurally leaner model, ensuring lasting performance gains. This architectural overhaul is typically a two-stage process: pruning, followed by knowledge distillation.

Pruning is the systematic identification and removal of non-essential components within the neural network, such as redundant weights, neurons, or even entire layers that contribute little to the model’s overall performance. Once the model has been streamlined, it enters the second stage: Knowledge Distillation. Here, the newly compacted model is retrained, but with a twist. It learns not only from the original dataset but also by mimicking the output of its larger, unpruned predecessor. This process effectively transfers the intelligence of the original model into the smaller, more efficient architecture.
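Both stages can be sketched on toy weight matrices. The pruning function below removes whole neurons using a simple L2-norm importance score (one common heuristic, chosen here for illustration; production pipelines often rank components by activation statistics instead). The distillation loss mixes ordinary cross-entropy on the ground-truth label with a temperature-scaled KL term toward the unpruned teacher. Function names and the α and temperature values are hypothetical.

```python
import math


def prune_neurons(weight_rows, next_layer_rows, keep_ratio):
    """Structured pruning: keep the output neurons (rows) with the largest L2 norm."""
    norms = [math.sqrt(sum(w * w for w in row)) for row in weight_rows]
    n_keep = max(1, round(len(weight_rows) * keep_ratio))
    ranked = sorted(range(len(weight_rows)), key=lambda i: norms[i], reverse=True)
    keep = sorted(ranked[:n_keep])  # preserve the original neuron order
    pruned = [weight_rows[i] for i in keep]
    # A removed neuron is also an input to the next layer, so drop its column there too.
    pruned_next = [[row[i] for i in keep] for row in next_layer_rows]
    return pruned, pruned_next, keep


def softmax(logits, temperature=1.0):
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, label, alpha=0.5, temperature=2.0):
    """alpha * cross-entropy on the true label + (1 - alpha) * T^2 * KL(teacher || student)."""
    hard = -math.log(softmax(student_logits)[label])  # learn from the original dataset
    p_t = softmax(teacher_logits, temperature)
    p_s = softmax(student_logits, temperature)
    soft = sum(pt * math.log(pt / ps) for pt, ps in zip(p_t, p_s) if pt > 0)  # mimic the teacher
    return alpha * hard + (1 - alpha) * temperature ** 2 * soft


W = [[0.1, 0.1], [2.0, 0.0], [0.0, -1.5], [0.2, -0.1]]  # 4 neurons, 2 inputs each
W_next = [[1.0, 2.0, 3.0, 4.0]]                          # next layer reads all 4 neurons
pruned, pruned_next, keep = prune_neurons(W, W_next, keep_ratio=0.5)
print(keep)  # [1, 2]
```

After pruning, the smaller network would be retrained by minimizing `distillation_loss`, with the original unpruned model providing `teacher_logits`.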

This combined strategy is the most thorough route to a compact, computationally inexpensive model that retains much of the capability and nuance of the original. The resulting model is not just temporarily optimized but permanently smaller and more efficient, making it well suited to resource-constrained environments such as edge devices, or to applications where long-term operational costs are a primary concern.

From Theory to Throughput: Your Strategic Blueprint for AI Optimization

The five techniques presented (PTQ, QAT, QAD, Speculative Decoding, and Pruning with Distillation) form a cohesive decision-making framework. Rather than a one-size-fits-all solution, this toolkit lets developers navigate the trade-offs between speed, accuracy, and implementation effort. By understanding the strengths and limitations of each method, teams can craft a tailored optimization strategy that aligns with their specific goals and constraints.

The practical blueprint for applying these tools is straightforward and hierarchical. Developers can begin with Post-training Quantization for rapid gains and to establish a performance baseline. If the resulting accuracy is insufficient, the next step is to escalate to Quantization-aware Training or Quantization-aware Distillation to recover that precision. For generative models where sequential output is the primary bottleneck, Speculative Decoding can be applied, often in conjunction with quantization. Finally, the more intensive path of Pruning and Distillation is best reserved for cases that call for long-term efficiency and a permanently smaller model.
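The escalation order in this blueprint can be captured as a small decision function. It is a hypothetical encoding of the article’s guidance, not an NVIDIA API, and the boolean inputs stand in for judgments a team would make from its own benchmarks.

```python
def optimization_plan(ptq_accuracy_ok, needs_max_fidelity, decode_bound_generative, wants_smaller_model):
    """Return the techniques to apply, in order, following the blueprint's escalation path."""
    steps = ["PTQ"]  # always start here: fast to apply and establishes a performance baseline
    if not ptq_accuracy_ok:
        # Recover lost accuracy: QAD for the most sensitive use cases, otherwise QAT.
        steps.append("QAD" if needs_max_fidelity else "QAT")
    if decode_bound_generative:
        steps.append("Speculative Decoding")  # can be combined with the chosen quantization
    if wants_smaller_model:
        steps.append("Pruning + Distillation")  # most intensive; for a permanently smaller model
    return steps


print(optimization_plan(False, False, True, False))  # ['PTQ', 'QAT', 'Speculative Decoding']
```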

Integrating these methods into a continuous development pipeline is the key to building not just high-performing but also economically viable AI systems. By making optimization a core part of the development lifecycle, teams can consistently produce models that are faster, more responsive, and less expensive to operate. This strategic approach empowers organizations to scale their AI initiatives sustainably, turning powerful prototypes into robust, production-ready applications.

The Path Forward: Why Efficient Inference Is the Key to Ubiquitous AI

As artificial intelligence becomes more deeply integrated into the fabric of daily life, the industry’s focus is shifting. Raw computational power, while still important, is being eclipsed by the growing need for smart, sustainable efficiency. The future of AI is not just about building larger models but about building smarter ones that can deliver intelligence quickly and cost-effectively.

Toolkits like the one unveiled by NVIDIA are more than collections of performance-enhancing features; they are critical enablers of the next wave of AI innovation. By making advanced models more accessible and scalable, these optimization strategies lower the economic and technical barriers that have historically limited deployment. This enables a broader range of applications, from real-time assistants on mobile devices to complex analytical engines that run efficiently in the cloud.

Ultimately, mastery of inference optimization is likely to distinguish the organizations that lead the next chapter of the AI revolution. Companies that treat efficiency as a core design principle rather than an afterthought will be best positioned to build the ubiquitous, responsive, and truly intelligent systems that shape the future.